Delegated Drift

Something has been on my mind lately about artificial intelligence and its practicality. There’s this growing assumption—especially among those who hold the capital—that if we just replace human labor with automation, things will get better. Efficiency will go up. Output will improve. But I keep circling back to a more fundamental question:

How did we get here?

The Cycle of Delegation

People have always worked. Whether for survival, dignity, or duty, work has shaped how we view ourselves and each other. Over time, we figured out how to offload the tasks we didn’t want to do. This is delegation. It’s the natural response to boredom, burnout, or higher priorities.

Before AI and even before widespread automation, this usually meant handing off work to another person. You train them, give them just enough of the picture to get it done, and move on. Then they do the same. Eventually, the task is being executed by someone far removed from its original context.

And here’s the problem: work loses its meaning when it’s too far from its purpose. What started as a necessary task becomes ritualized, something we do simply because it has always been done that way.

Over time, this creates what I’d call “delegation debt”—a kind of conceptual drift where the task continues to exist even after its usefulness has expired. We stop asking why, and just focus on how.

The AI Tipping Point

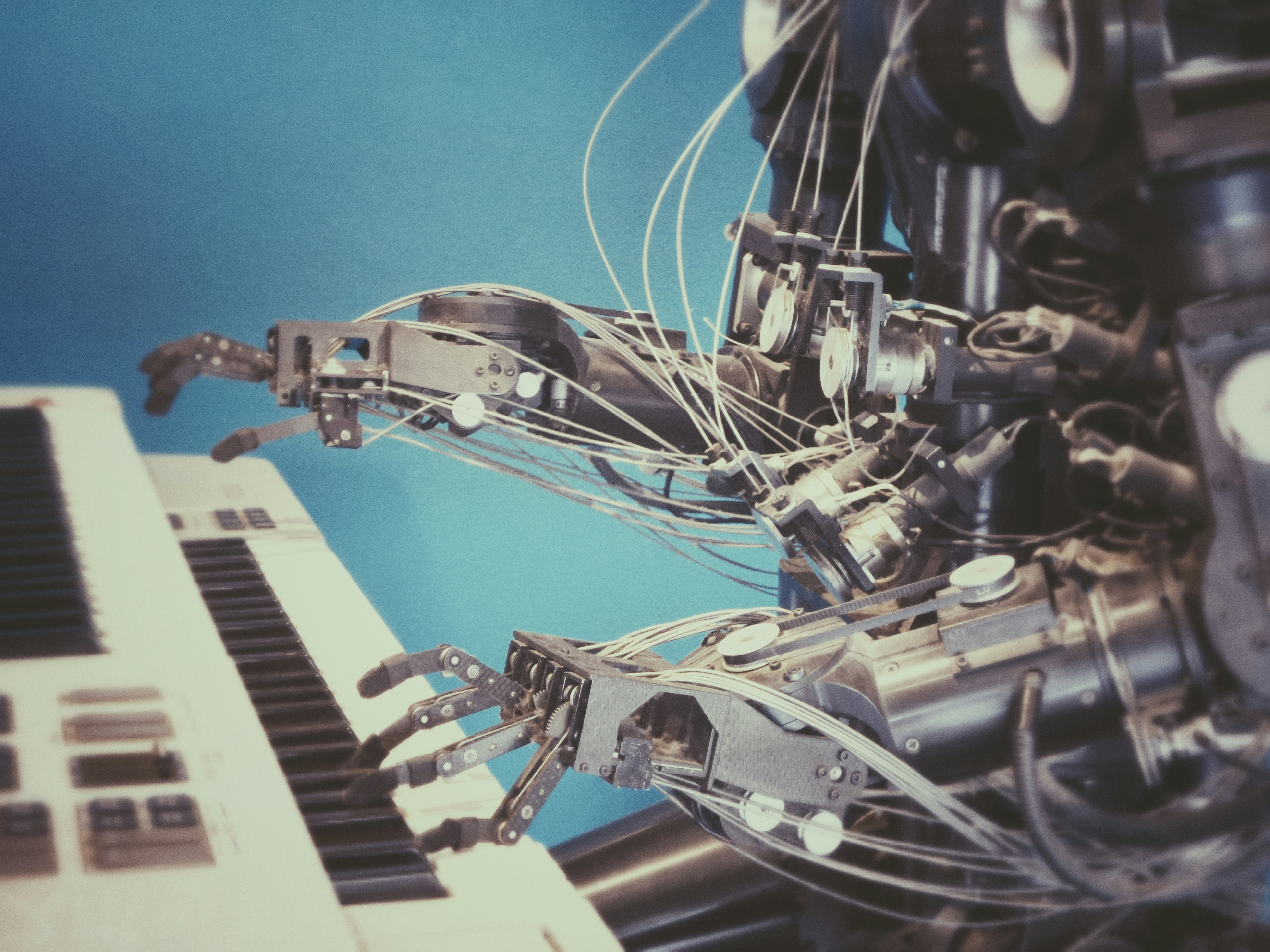

Enter AI. Now we’re not just delegating to people, we’re delegating to machines. Fast. At scale. We’re automating everything from document classification to decision-making, often without re-evaluating whether the underlying task still matters. We’re cleaning house, but nobody’s checking whether the furniture still belongs there.

And AI, as it is usually deployed, almost always produces something. Unless it is asked to, it doesn’t evaluate whether a task is worth doing; it just executes. That’s what it was trained to do. Even if the task is unnecessary, the model will still deliver an output, a response, a result. And in doing so, it gives the illusion of progress.

In this new world, automation isn’t always solving real problems—it’s often just accelerating outdated processes. It’s like paving a road to a place no one lives anymore.

And this is where it starts to feel hollow. We celebrate optimization, but sometimes we’re just streamlining inefficiency. We’re applying powerful tools to brittle frameworks, expecting transformation but getting maintenance.

Work Without Meaning

What troubles me is not just the inefficiency; it’s the philosophical drift. If AI keeps doing the things we stopped questioning, what happens to human insight? What happens to reflection? If we no longer need to do the task ourselves, and no one stops to ask why it was there in the first place, we start building systems that run on autopilot toward nowhere.

And then what?

A Better Question

Maybe the real innovation isn’t just in teaching machines to do our work, but in using that technology as a mirror to ask: Is this work even worth doing anymore?

AI is a powerful force, but like all delegation, it comes with risk. The further we get from intention, the easier it is to lose the thread. So before we automate the next process, the next workflow, the next decision point—it might be worth stepping back and asking: Why does this exist? What would happen if we stopped doing it altogether? Not everything needs to be done better. Some things don’t need to be done at all.